Week 1 taught you to sharpen the ask.

Week 2 gave you experts on demand.

Week 3 showed you how to teach through examples.

Week 4 forced your AI to think step by step.

But none of it matters if AI is lying to you.

It fabricates. Boldly.

Fills gaps with nonsense. Frequently.

It can repeat a debunked myth (say, that Barack Obama was born in Kenya) with total confidence.

So if you’re trusting AI to write reports, summarize research, or generate insights without fact-checking—you’re playing with fire.

This week, we’re fixing that.

The PromptTANK™ 8-Week Sprint is designed to rewire your thinking about AI prompting.

Each week:

🎯 1 core principle

⚔️ 1 real-world challenge

🚫 No fluff. No gimmicks. No upsells.

LLMs don’t “know” facts. They predict text based on probability. The good news is, AI can help fact-check itself, if you tell it to.

Trust, But Promptify: 3-Step PromptTANK™ Truth Finder

1️⃣ Demand Sources Upfront

AI needs to cite sources as it generates an answer, not as an afterthought.

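👉 "Provide an answer using only verifiable sources. Include citations with working links where possible." (Pushes it to ground each claim in a source you can check. Still click the links: a model without web access can invent citations that look real.)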

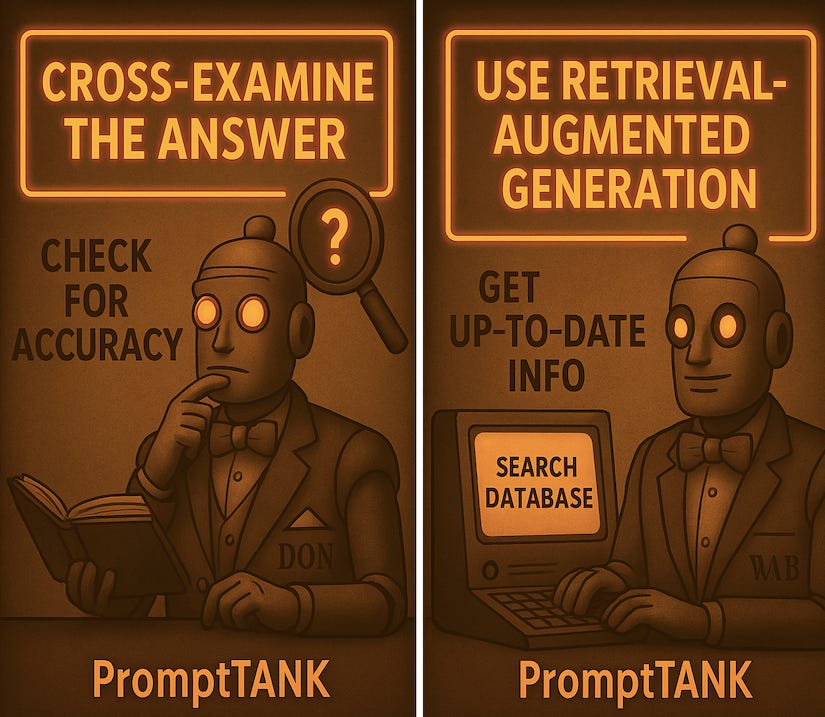
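Asking for working links only helps if someone actually clicks them. Here’s a minimal Python sketch of that check, assuming the answer arrives as plain text with bare or markdown-style links. `extract_urls` and `link_works` are hypothetical helper names, and the URL pattern is deliberately rough, not a full URL parser.

```python
import http.client
import re
import urllib.request

# Rough pattern for http(s) links in plain or markdown text: stops at whitespace,
# closing parentheses or brackets, angle brackets, and quotes.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def extract_urls(text: str) -> list[str]:
    """Return cited URLs in order of appearance, minus duplicates and trailing punctuation."""
    urls: list[str] = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:")
        if url not in urls:
            urls.append(url)
    return urls


def link_works(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers a HEAD request without an HTTP error."""
    try:
        request = urllib.request.Request(
            url, method="HEAD", headers={"User-Agent": "citation-check"}
        )
        # urlopen raises HTTPError (an OSError subclass) for 4xx/5xx responses.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status < 400
    except (OSError, ValueError, http.client.HTTPException):
        # Unreachable host, timeout, HTTP error, or a malformed URL.
        return False
```

A link that loads still isn’t proof the page says what the AI claims; it only weeds out citations that don’t exist. Some sites also reject `HEAD` requests, so treat a `False` as “check by hand,” not “fake.”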

2️⃣ Cross-Examine the Answer

AI will always sound confident—even when it’s wrong. Challenge it.

👉 "What sources did you use to generate this response? Are any of them unverifiable?"

👉 "Double-check your response. Are any claims uncertain or unsupported by evidence?" (This makes the model self-check for hallucinations.)
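If you’re calling a model from code instead of a chat window, cross-examination is just more turns in the same conversation. A minimal sketch, assuming the common `{"role": ..., "content": ...}` chat-message shape; `ask` is a hypothetical stand-in for whatever client function sends the messages and returns the reply text.

```python
from typing import Callable

# One chat message in the {"role": ..., "content": ...} shape many chat APIs use.
Message = dict[str, str]

# The two challenge prompts from this step, asked in order.
CROSS_EXAMINE = [
    "What sources did you use to generate this response? Are any of them unverifiable?",
    "Double-check your response. Are any claims uncertain or unsupported by evidence?",
]


def cross_examine(question: str, ask: Callable[[list[Message]], str]) -> list[Message]:
    """Ask a question, then put each challenge to the model in the same conversation.

    `ask` receives the full message history and returns the model's reply text.
    """
    messages: list[Message] = [{"role": "user", "content": question}]
    messages.append({"role": "assistant", "content": ask(messages)})
    for challenge in CROSS_EXAMINE:
        messages.append({"role": "user", "content": challenge})
        messages.append({"role": "assistant", "content": ask(messages)})
    return messages  # full transcript: answer plus both self-checks
```

Keeping everything in one conversation matters: the follow-up questions only work if the model can see the answer it’s being asked to defend.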

3️⃣ Use Retrieval-Augmented Generation (RAG)

If you need real, up-to-date, sourced information, don’t rely on what the model absorbed during training. Use RAG: AI combined with a live search index, database, or document repository. Instead of guessing from memory, the AI first retrieves relevant documents and then answers from them.

👉 "Search [trusted database] for verified sources before answering. Use only retrieved data."

(Works only on AI systems connected to retrieval, like Perplexity AI, ChatGPT with web access, or custom GPTs with uploaded documents.)
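Under the hood, RAG is two steps: find relevant passages, then hand them to the model with orders to stay inside them. A toy Python sketch, assuming a small in-memory corpus; the keyword-overlap `retrieve` function is a stand-in for a real search index or vector database, and `Doc`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    source: str  # where the text came from (title, URL, file name)
    text: str


def tokens(text: str) -> set[str]:
    """Lowercase word set used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Doc], k: int = 2) -> list[Doc]:
    """Rank documents by how many query words they share (stand-in for real search)."""
    q = tokens(query)
    scored = [(len(q & tokens(d.text)), i, d) for i, d in enumerate(corpus)]
    scored = [s for s in scored if s[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda s: (-s[0], s[1]))  # most overlap first, ties by corpus order
    return [d for _, _, d in scored[:k]]


def build_prompt(query: str, docs: list[Doc]) -> str:
    """Put retrieved passages in the prompt and restrict the model to them."""
    context = "\n".join(
        f"[{n}] ({d.source}) {d.text}" for n, d in enumerate(docs, start=1)
    )
    return (
        "Answer using only the numbered sources below. Cite them like [1]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

Real systems swap the keyword count for embeddings or a search API, but the prompt side stays the same: numbered sources, a “use only these” rule, and explicit permission to say the answer isn’t there.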

🔴 BAD Prompt:

"Summarize the latest Alzheimer’s treatments."

(AI generates a mix of real and hallucinated research.)

🟢 GOOD Prompt:

"Summarize the latest Alzheimer’s treatments only using verifiable sources. Provide citations and include any uncertainties in your response."

(Now it’s told to back up its claims or admit gaps in its knowledge.)

🔴 BAD Prompt:

"What’s the average salary for a software engineer?"

(AI pulls outdated or made-up numbers out of its robotic ass.)

🟢 GOOD Prompt:

"Retrieve the most recent average salary for software engineers from the Bureau of Labor Statistics or LinkedIn Salary Insights. Provide a link to the data source."

(With web access, this pushes AI toward real published numbers instead of guesses, and the link lets you verify them.)
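Naming the BLS or LinkedIn is half the job; the other half is checking that the answer actually links there. A minimal sketch, assuming the response is plain text with bare URLs; `cites_trusted_source` is a hypothetical helper, and the allowlist of domains is yours to define.

```python
import re
from urllib.parse import urlparse

# Rough pattern for http(s) links; stops at whitespace, brackets, and quotes.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def cited_hosts(answer: str) -> set[str]:
    """Collect the lowercase host names of every http(s) link in the answer."""
    hosts: set[str] = set()
    for match in URL_PATTERN.findall(answer):
        host = urlparse(match.rstrip(".,;:")).hostname  # hostname is lowercased
        if host:
            hosts.add(host)
    return hosts


def cites_trusted_source(answer: str, trusted: set[str]) -> bool:
    """True if any link points at a trusted domain (lowercase) or one of its subdomains."""
    return any(
        host == domain or host.endswith("." + domain)
        for host in cited_hosts(answer)
        for domain in trusted
    )
```

Call it as `cites_trusted_source(answer, {"bls.gov", "linkedin.com"})`. This only confirms where the link points. You still have to open it and check that the number matches.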