Week 3: Show, Don’t Tell – How to Train AI with 2 Examples

Your AI isn’t confused. It’s under-trained. Here’s how to get elite output without fine-tuning, coding, or guessing.

The PromptTANK™ 8-Week Sprint is designed to rewire how you think about AI prompting.

Each week:

🎯 1 core principle

⚔️ 1 real-world challenge

🚫 No fluff. No gimmicks. No upsells.

👋 Welcome back. Week 1 showed you how to stop sounding like a résumé generator and start structuring prompts for clarity and impact. Week 2 taught you to stop describing the output and start defining the expert.

This week, we shift from who to how.

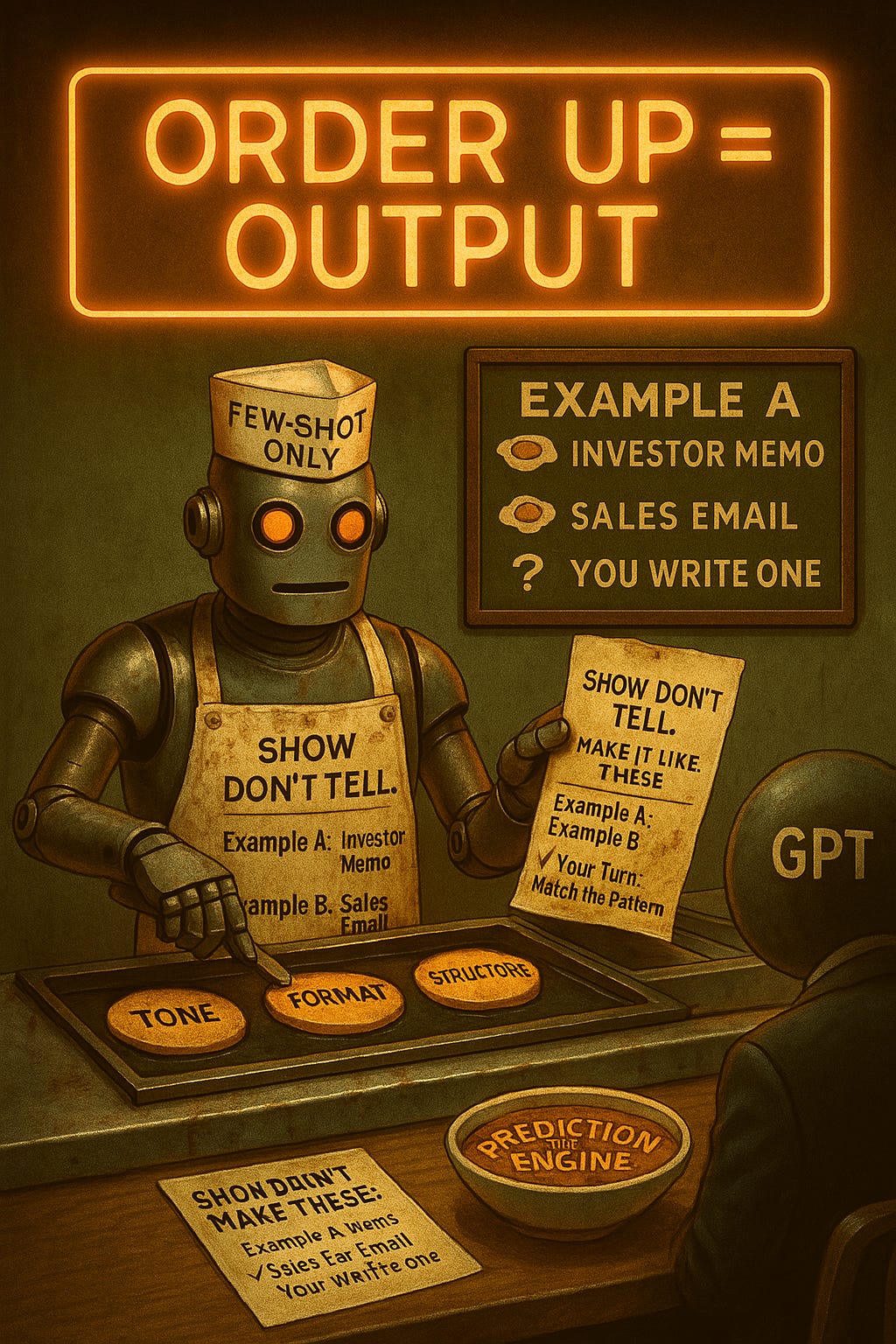

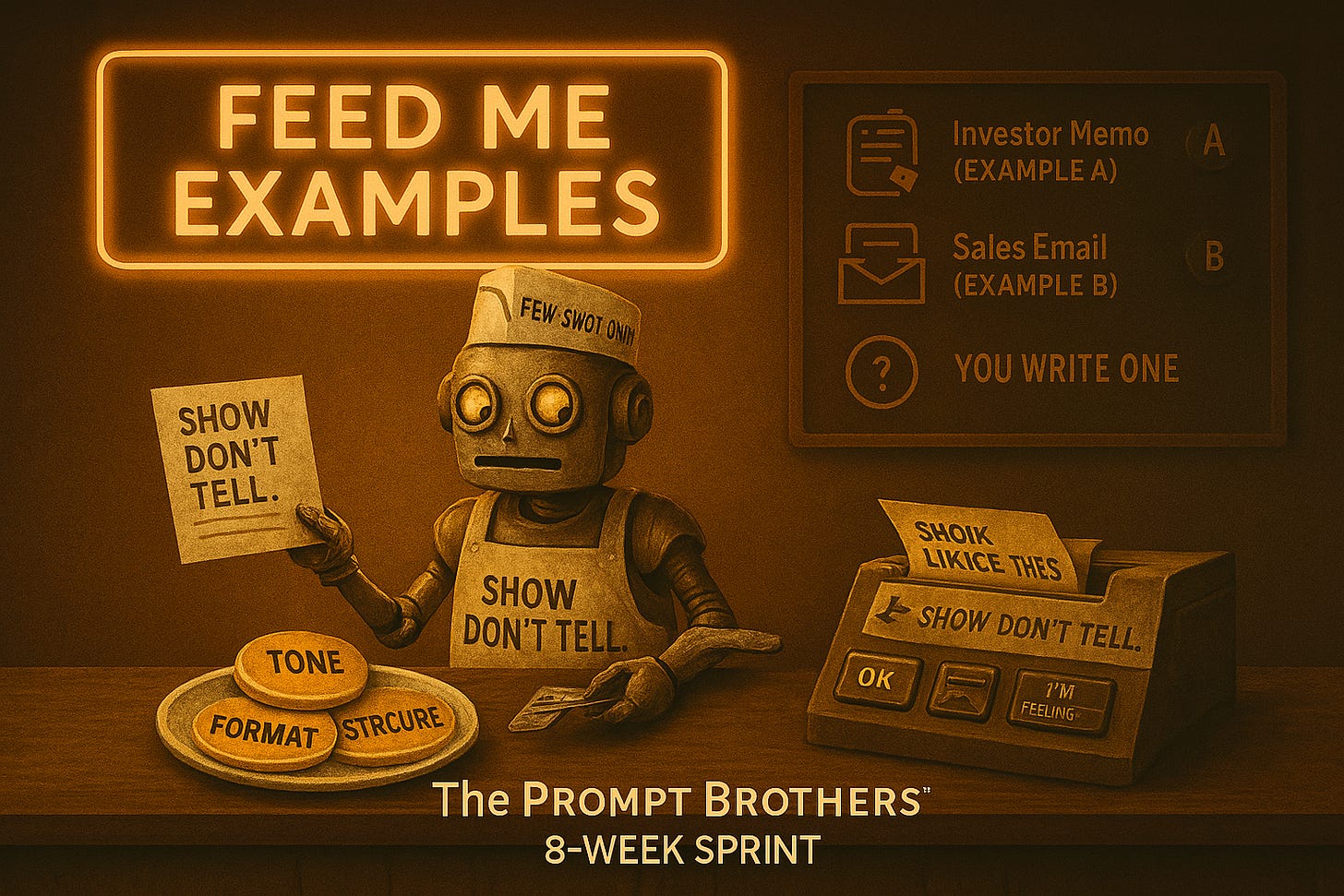

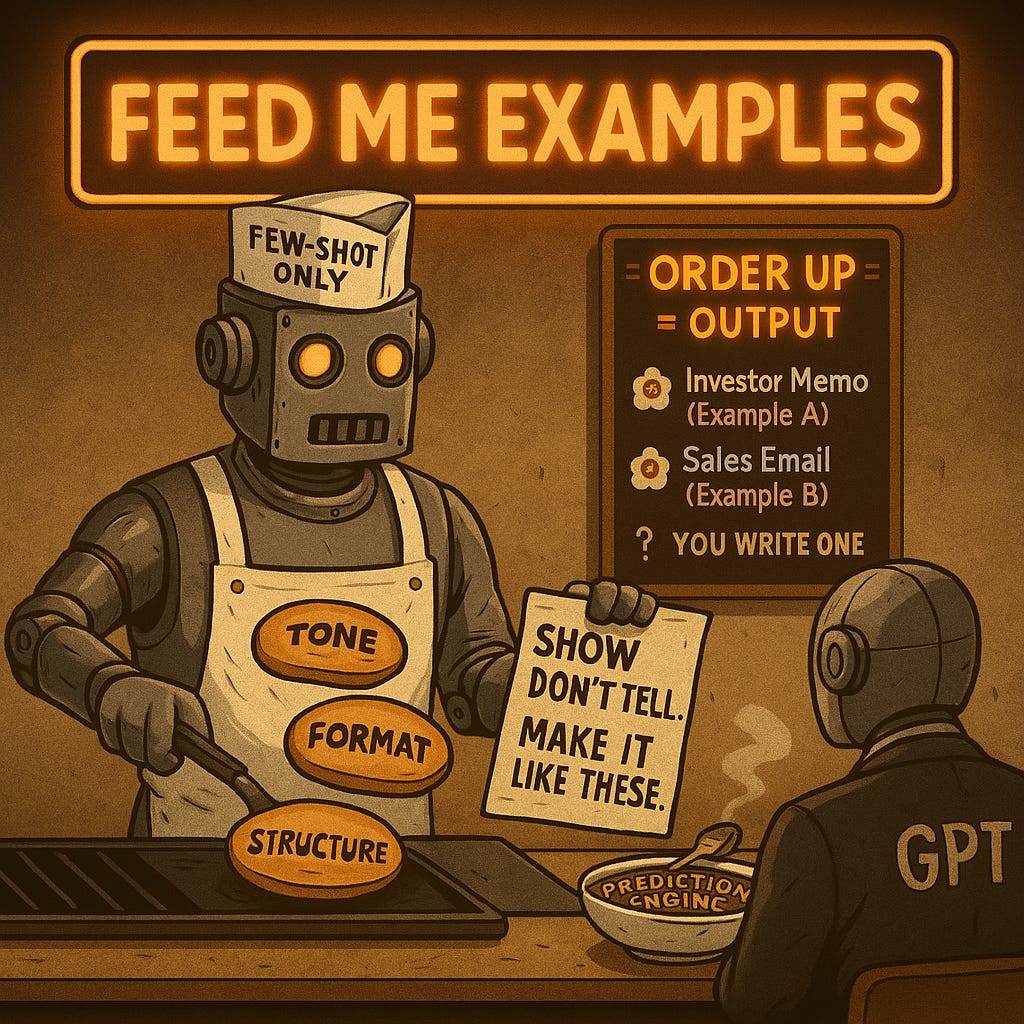

Most people write a prompt, hit send, and hope the AI “gets it.” It doesn’t. It predicts patterns—and you control those patterns with examples. If you're not getting top-tier output, the model isn’t broken. You're just flying blind.

💡 The PromptTANK™ Laws of AI Precision 💡

Your prompt safety net: don’t skip it.

1. Clarity over cleverness. Your input is not a riddle.

2. AI is a prediction engine, not a mind reader. Feed it structure.

3. Vague in = vague out. Always.

4. Context is leverage. Attach anything that sharpens the ask.

5. Constraints are freedom. Define what matters—and what doesn’t.

6. Outcome beats output. Ask for results, not rambling.

7. Roleplay unlocks expertise. “Act as” is your cheat code.

8. Examples train the model. Show before you tell.

9. Break big asks into steps. Chain of thought wins.

10. AI doesn’t fact-check itself. You have to. Always keep a human in the loop.

🧠 WEEK THREE || Examples Train the Model

👶 8th-Grade Explainer

Imagine hiring a wicked-smart intern. You could hand them a dense, soul-crushing 5-page SOP… or you could slide over two good examples and say, “Just do it like this.” Boom: instant understanding. Minimal confusion. Maximum output.

AI’s the same.

One example calibrates it. Two? Now it’s thinking like you.

⚙️ Why This Matters to Leaders

Don’t just describe what you want. Demonstrate it. Examples teach the model how to behave (tone, structure, logic) without you having to spell it out. One good pattern beats 100 bad instructions.

🧪 Examples in Action

🔍 Real-world tip: You can include your examples inline in the prompt or attach them as files (PDFs, spreadsheets, past memos, etc.). Just reference each one.

Example 1: Sales Email Follow-Up (B2B SaaS)

Prompt (built on the Persona Pattern from Week 2): “You’re a B2B SaaS sales director. Review the two attached follow-up emails. Based on these, write a third follow-up email for [insert prospect info]. Match tone and format.”

💡 Why this works: The model didn’t guess your voice. It inferred it from your attached examples.

Example 2: Executive Summary (Investor Deck)

Prompt: “Refer to the attached investor summaries. Based on these examples, draft a new Q2 summary for our latest pitch deck. Match the structure, brevity, and investor-first tone.”

💡 Why this works: You didn’t explain “tone” or “focus”—you showed it.

Example 3: Annual Performance Review (People Ops)

Prompt: “See the two past performance summaries attached. Based on these, write one for Taylor. Use the same cadence, structure, and balanced tone.”

💡 Why this works: The format and framing are baked into the pattern. The model simply followed it.

🧱 Prompt Template:

“Here are two examples of [type of writing]. Based on them, write one for [context]. Match tone, style, and structure.”

✳️ Use the attached files or paste the examples inline. Just make them specific.
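If you reuse this template a lot, a few lines of code can fill it in for you. The sketch below is a minimal illustration, assuming you paste the examples inline as plain text rather than attaching files. The helper name `build_few_shot_prompt` and the sample email snippets are hypothetical placeholders, not part of any PromptTANK tool or AI vendor’s API. The helper only builds the prompt text; you would still paste it into (or send it to) the AI tool of your choice.

```python
def build_few_shot_prompt(writing_type: str, examples: list[str], context: str) -> str:
    """Fill the 'two examples' template with inline examples (hypothetical helper)."""
    # The template is built around showing at least two examples.
    if len(examples) < 2:
        raise ValueError("Provide at least two examples.")

    # Number each example so the model (and you) can tell them apart.
    blocks = [f"Example {i}:\n{text.strip()}" for i, text in enumerate(examples, start=1)]

    header = f"Here are {len(examples)} examples of {writing_type}."
    instruction = f"Based on them, write one for {context}. Match tone, style, and structure."

    # Blank lines between sections keep each example visually separate.
    return "\n\n".join([header, *blocks, instruction])


if __name__ == "__main__":
    # Placeholder snippets; swap in your own real, specific examples.
    prompt = build_few_shot_prompt(
        "B2B sales follow-up emails",
        [
            "Hi Dana, following up on Tuesday's demo...",
            "Hi Raj, quick nudge on the pilot proposal...",
        ],
        "a prospect who asked for pricing last week",
    )
    print(prompt)
```

The design choice is deliberate: the examples stay separate from the instruction, so you can swap in new examples without rewriting the ask.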

Once you show the model how to think, you’ll never settle for generic instructions again.

🗺️ Sprint Map: Where We’re Going

✅ Week 1: Clarity = Impact

✅ Week 2: The Persona Pattern

➡️ Week 3: Examples Train the Model

🔜 Week 4: Constraints Create Freedom

🔜 Week 5: Break It Down to Build It Right

🔜 Week 6: Outcome > Output

🔜 Week 7: Act Like You Own the Place

🔜 Week 8: Precision at Scale

🧭 Up Next — Week 4: Constraints Create Freedom

Structure doesn’t limit creativity—it sharpens it. We’ll show you how to box in chaos and get better results, faster. You’re not just getting better at prompting. You’re building a system that scales the best thinking.